- By BPR

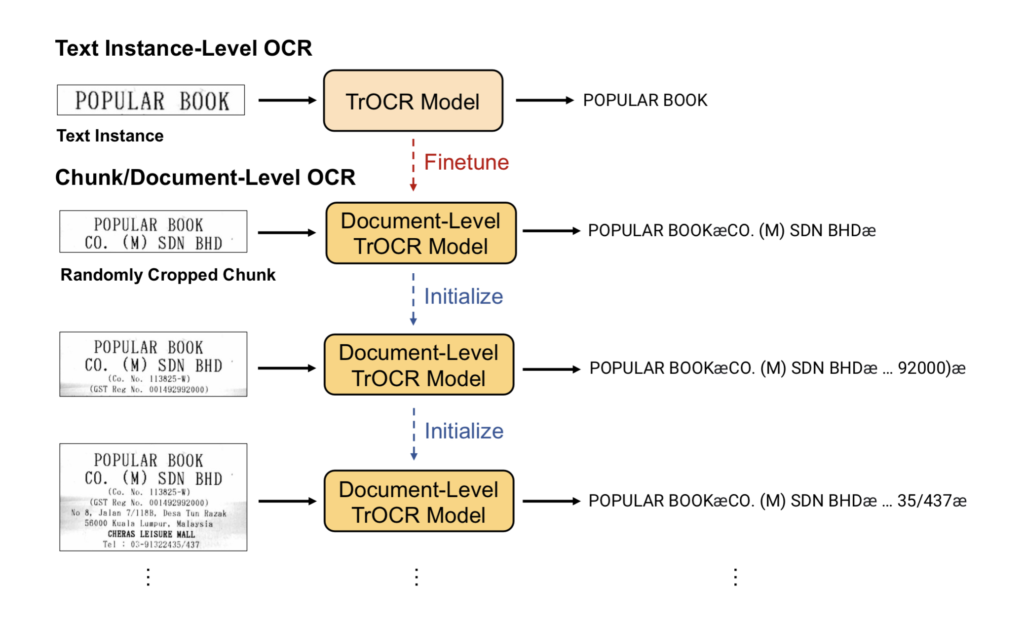

We used the SROIE English-language receipt dataset for our experiments and analyzed the effect of different image chunk sizes on the OCR error rate. With this approach, the final model takes whole images of receipts as input, without any image pre-processing such as image rectification or text detection, and outputs a string of text corresponding to what appears on the receipt. The best result, a character error rate of 4.98%, was obtained using chunks smaller than the whole image, which we attribute to the relatively small, fixed size of the “patch” used by the base TrOCR model.
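For readers unfamiliar with the metric, the sketch below shows one common way to compute a character error rate: the Levenshtein edit distance between the reference text and the model output, divided by the reference length. It also includes a hypothetical helper, `chunk_bounds`, that cuts a receipt into fixed-height horizontal strips. Both are illustrative assumptions rather than the code used in our experiments: the exact CER definition, the chunking scheme, and the sample values are chosen only for the example.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]  # deleting the first i reference characters
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """CER as edit distance divided by reference length (assumed definition)."""
    if not ref:
        raise ValueError("reference text must not be empty")
    return edit_distance(ref, hyp) / len(ref)


def chunk_bounds(height: int, chunk_height: int) -> list[tuple[int, int]]:
    """Hypothetical helper: (top, bottom) pixel rows of non-overlapping strips."""
    if chunk_height <= 0:
        raise ValueError("chunk_height must be positive")
    return [(top, min(top + chunk_height, height))
            for top in range(0, height, chunk_height)]


if __name__ == "__main__":
    # Made-up strings: one substituted character out of eleven.
    print(character_error_rate("TOTAL 12.50", "TOTAL 12.80"))
    # Made-up image height and chunk height, in pixels.
    print(chunk_bounds(1000, 384))
```

Under this definition, a 4.98% character error rate corresponds to roughly five character edits per hundred reference characters.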

We believe that both this research work and this publication are a significant contribution to the state of the art, and that they demonstrate how Best Path Research works with research students to develop and advance technologies that benefit both the company and the student.

Best Path Research is very happy to host interns to work on cutting-edge machine learning research and development projects and will pay a competitive salary to do so. If you are currently a student interested in pursuing an internship with us, please get in touch!

Keywords: Optical Character Recognition (OCR), PyTorch, Encoder-Decoder Transformer Models, TrOCR, End-to-end Training, Fine-tuning, Collaboration, arXiv
